When it comes to choosing the right laptop model for the job, we’re usually concerned about two things: speed and cost. We don’t want to buy our users a Ferrari when their job has a 55 mph speed limit. But in the world of electric vehicles, shouldn’t we also be considering how long the battery lasts? (Sorry, I had to keep the car analogy going…)

Sure, vendors publish theoretical numbers for how long a battery should last, but those numbers aren’t based on the application workloads your users actually run. So while your Continuous Test is already simulating your users’ application workflows, why not layer in battery utilization? Thanks to one of my favorite things ever, Session Metrics, that’s super easy to accomplish!

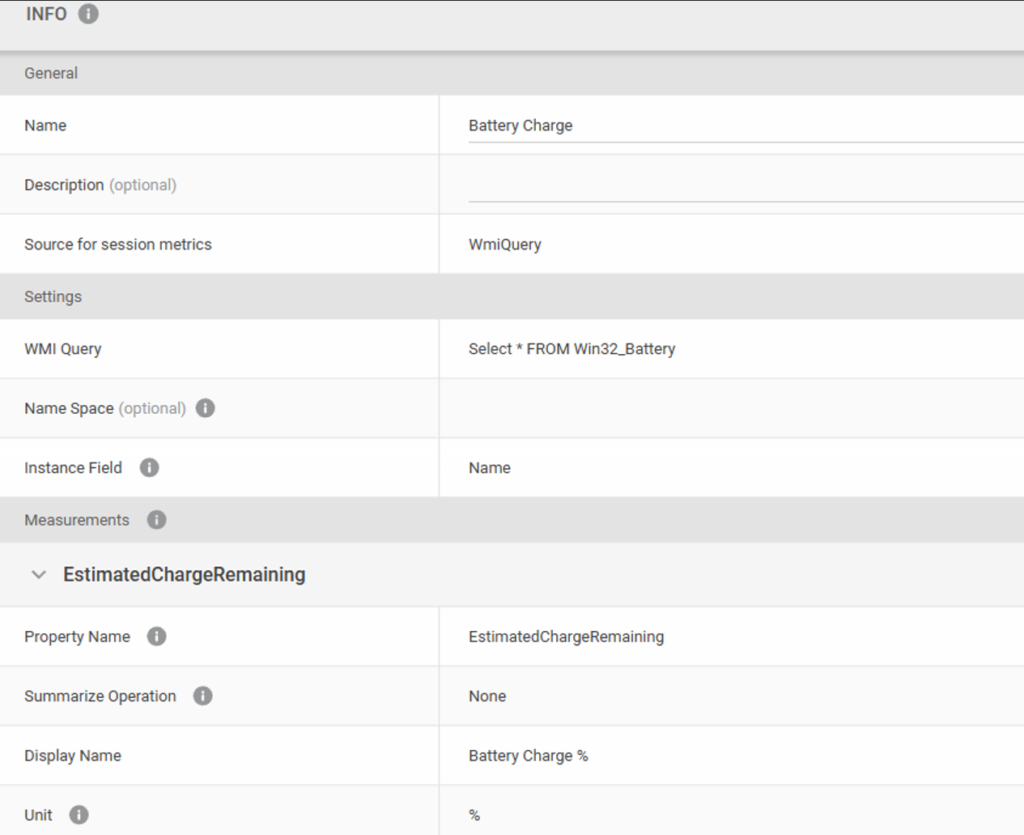

Head to Session Metrics and create a new metric based on WMI. The WMI class `Win32_Battery` reports our battery statistics, so we’ll use the query `SELECT * FROM Win32_Battery` and read the property `EstimatedChargeRemaining`. Once you have everything filled out, it should look like this:

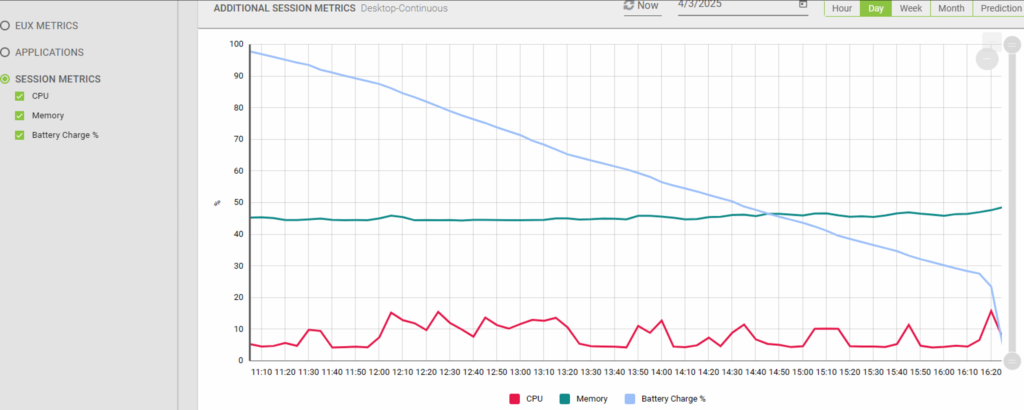
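If you want to sanity-check the WMI data on a laptop before wiring it into a Session Metric, here is a minimal sketch, assuming Python 3 and Windows PowerShell are available. It reads `EstimatedChargeRemaining` through the `Get-CimInstance` cmdlet, which targets the same `Win32_Battery` class as the query above. The helper names `read_battery_charge` and `parse_charge_values` are hypothetical and only for illustration; this is not how Login Enterprise itself collects the metric.

```python
import subprocess
from typing import List

# Equivalent to the Session Metric's "SELECT * FROM Win32_Battery" query,
# but expanded down to the single property we care about.
POWERSHELL_COMMAND = (
    "Get-CimInstance -ClassName Win32_Battery | "
    "Select-Object -ExpandProperty EstimatedChargeRemaining"
)


def parse_charge_values(output: str) -> List[int]:
    """Turn PowerShell output into one charge percentage per battery.

    Blank lines are skipped, so a machine with no battery yields [].
    """
    return [int(line.strip()) for line in output.splitlines() if line.strip()]


def read_battery_charge() -> List[int]:
    """Query WMI via PowerShell (Windows only) and return charge percentages."""
    result = subprocess.run(
        ["powershell", "-NoProfile", "-Command", POWERSHELL_COMMAND],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_charge_values(result.stdout)
```

On a Windows laptop, calling `read_battery_charge()` should return a list with one value per battery; an empty list usually means `Win32_Battery` has nothing to report (for example, on a desktop VM), which is worth knowing before you build a chart around the metric.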

Now, head to the Groups tab underneath Session Metrics and add the new metric to the group associated with your laptop’s Continuous Test. Check it out, you can now add Battery Charge % to your charts!

The funny thing is, my laptop vendor’s spec sheet said the battery should last around 14 hours of continuous use. In my test it lasted about 7 hours. While that’s plenty impressive, it’s still half of what I was told I should get, even on optimized battery settings! Not to mention my CPU never went above 20%… And look at the huge dive after I reached 25%!
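To turn exported Battery Charge % readings into a number you can hold up against the spec sheet, here is a minimal sketch, assuming you have samples as `(elapsed_minutes, charge_percent)` pairs. The function names and the sample values below are hypothetical and made up for illustration; they are not from my test. It computes the average drain rate, a linear projection of full-charge runtime, and per-interval drain rates that make a sudden dive stand out.

```python
from typing import List, Tuple

# Hypothetical sample format: (elapsed_minutes, charge_percent)
Sample = Tuple[float, float]


def drain_rate_per_hour(samples: List[Sample]) -> float:
    """Average percentage points lost per hour between first and last sample."""
    (t0, c0), (t1, c1) = samples[0], samples[-1]
    hours = (t1 - t0) / 60.0
    if hours <= 0:
        raise ValueError("samples must span a positive time range")
    return (c0 - c1) / hours


def projected_runtime_hours(samples: List[Sample]) -> float:
    """Hours a full 100% charge would last at the observed average rate."""
    rate = drain_rate_per_hour(samples)
    if rate <= 0:
        raise ValueError("charge did not decrease over the samples")
    return 100.0 / rate


def interval_rates(samples: List[Sample]) -> List[float]:
    """Drain rate (%/hour) for each consecutive pair of samples."""
    return [
        (ca - cb) / ((tb - ta) / 60.0)
        for (ta, ca), (tb, cb) in zip(samples, samples[1:])
    ]


# Made-up illustrative readings, not real measurements.
example = [(0, 100), (60, 90), (120, 80), (150, 65)]
```

With these made-up readings, `interval_rates(example)` gives `[10.0, 10.0, 30.0]`, so the last interval drains three times faster than the others, and `projected_runtime_hours(example)` comes out to roughly 7.1 hours. Keep in mind that the projection assumes a constant drain rate; a dive like the one below 25% breaks that assumption, which is exactly why measuring the whole discharge beats extrapolating from a short run.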

This is just another example of how the “generic” numbers our vendors give us can never truly reflect our own environments. Without simulating user behavior and performing the ACTUAL application workloads an organization’s users are doing, there’s really no way to judge how long my laptop battery is going to last. Or how many users I can fit on an AVD multi-session instance. Or what my vCPU/pCPU ratio should be for my on-prem VDI deployments.

Login Enterprise takes the guesswork out of these decisions by running real user workloads in your own environment and giving you actual data, not just vendor estimates. The result: confident decisions, optimized performance, and fewer surprises when deploying new hardware or rolling out changes.

So go ahead: unplug that laptop and layer this metric into your next test. You might be surprised. Happy monitoring!