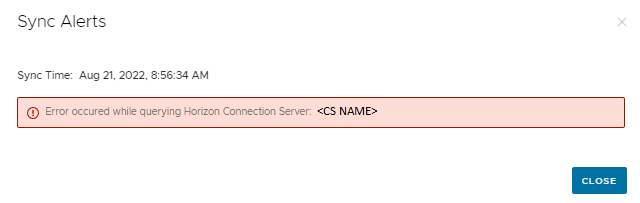

Recently I came across an issue where Workspace ONE Access syncs suddenly began failing after Horizon upgrades, specifically from 7.13 to 2111. Of course, this did not happen when we upgraded the test environment. In the Access Admin Console, the failed sync details show only a generic error:

“Error occurred while querying Horizon Connection Server” followed by the CS name configured for the first pod in the CPA.

So, naturally, head over to the top Connector configured in your app collection sync. That Connector takes sync priority as long as it is online, so it will have the relevant logs. The Connector logs are located at <install directory>\Virtual App Service\logs\eis-service.log, and their timestamps are in GMT. A few errors to look out for in this particular scenario:

ERROR <CONNECTOR NAME>:eis (Sync-Task-22) [WS1 TENANT; GUID] com.vmware.vidm.eis.sync.SyncService - Sync failed for profile xxx com.vmware.vidm.eis.view.exception.ViewApiClientException: eis.view.query.error

...

Caused by: com.vmware.vim.vmomi.client.exception.ConnectionException: java.net.SocketTimeoutException: Read timed out

...

Caused by: com.vmware.vim.vmomi.client.exception.ConnectionException: java.net.SocketTimeoutException: Read timed out

Before these errors, you will actually see the entire list of Global Entitlements/apps being found, which means the Connector is communicating with the Horizon API successfully (no SSL errors, etc.). That is followed by the sync summary:

Sync summary for the tenant <TENANT NAME>, profile xxx and Integration Type View :

Pull ViewApp From WorkspaceOne,Count=123 ,Completed in 0.891 Seconds,Result=Success

...

Pull ViewApp From View,Count=123 ,Completed in 23.123 Seconds,Result=Success

Sync Operation,Completed in 339.447 Seconds,Result=Failure

Pull Global ViewApp From View,Completed in 0.0 Seconds,Result=Failure

Total Fetch And Diff Operation For ViewApp,Completed in 0.0 Seconds,Result=Failure

So, from the logs, we can see that the apps were pulled successfully, but something is likely hitting a timeout. Notice that the sync operation quits at roughly 340 seconds, a little after the default timeout of 300 seconds! There is actually a configuration file in Connector 21.08.x that controls this timeout: Virtual App Service\conf\application.properties. As always, take a backup of this file first, then modify the following value:

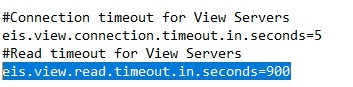

eis.view.read.timeout.in.seconds=300

Jack it up to something like 900 seconds and restart the virtual app sync service. Run another sync, and hopefully that resolves the issue!
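If you have several Connectors to update, the edit can also be scripted. Below is a minimal Python sketch, assuming Python is available on the Connector host and that the property sits on its own `key=value` line as in the stock file. The function name `set_read_timeout` and the `.bak-<timestamp>` backup naming are my own illustration, not anything shipped with the Connector. It copies the file to a timestamped backup, then rewrites (or appends) `eis.view.read.timeout.in.seconds`.

```python
import shutil
import sys
import time
from pathlib import Path

# Property name as it appears in application.properties on Connector 21.08.x.
TIMEOUT_KEY = "eis.view.read.timeout.in.seconds"


def set_read_timeout(props_path: Path, seconds: int) -> Path:
    """Back up the properties file, then set the Horizon API read timeout.

    Assumes the key is written as "key=value" on its own line (as in the
    stock file). If the key is not present, it is appended. Returns the
    path of the backup copy.
    """
    # Timestamped backup next to the original (hypothetical naming scheme).
    stamp = time.strftime("%Y%m%d%H%M%S")
    backup = props_path.with_name(f"{props_path.name}.bak-{stamp}")
    shutil.copy2(props_path, backup)

    # latin-1 round-trips any byte, so unrelated lines are preserved as-is.
    lines = props_path.read_text(encoding="latin-1").splitlines()
    found = False
    for i, line in enumerate(lines):
        # Commented lines ("#eis...") won't match because "#" stays in the key.
        key = line.split("=", 1)[0].strip()
        if key == TIMEOUT_KEY:
            lines[i] = f"{TIMEOUT_KEY}={seconds}"
            found = True
    if not found:
        lines.append(f"{TIMEOUT_KEY}={seconds}")

    props_path.write_text("\n".join(lines) + "\n", encoding="latin-1")
    return backup


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python set_view_timeout.py "<path to application.properties>" [seconds]
    target = Path(sys.argv[1])
    secs = int(sys.argv[2]) if len(sys.argv) > 2 else 900
    print("Backup written to", set_read_timeout(target, secs))
```

Run it against each Connector's application.properties, for example `python set_view_timeout.py "<install directory>\Virtual App Service\conf\application.properties" 900` (the script name is hypothetical), then restart the virtual app sync service yourself; the sketch does not touch the service.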

In summary, I know there were some recent API changes to address earlier 7.x versions maxing out CPU on the REST API. If I had to guess, there is now a throttling mechanism that slows down API responses on newer Horizon versions. This could be a factor in any 7.x to 8.x upgrade. Because we did not see it in the test environment, my guess is that it only shows up in larger environments with 100+ desktops/apps/global entitlements. Hope this helps in your upgrade/migration efforts out of Horizon 7!